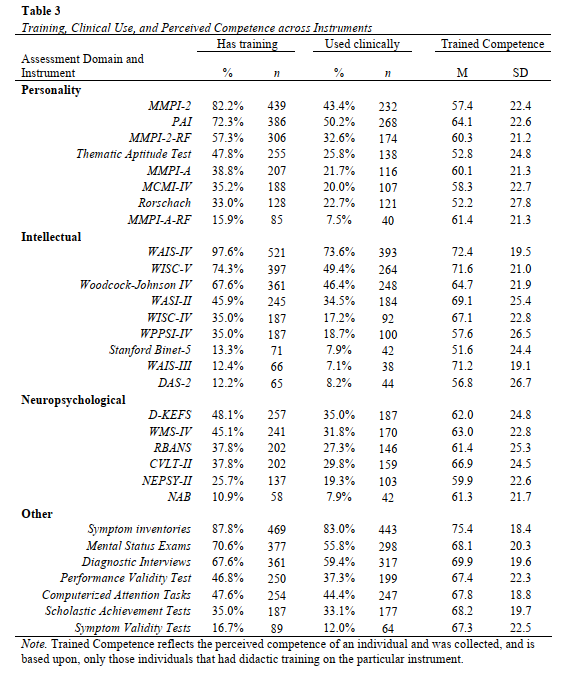

Although clinicians are trained in and use the PAI about as often as the MMPI (Wright et al., 2017; Ingram et al., 2020), its validity scales have not received the same level of study, nor have they shown the same rate of detection for invalid responding within military populations (see Brittney’s thesis as an example). In fact, even when new validity scales are developed, they are rarely evaluated beyond their initial validation.

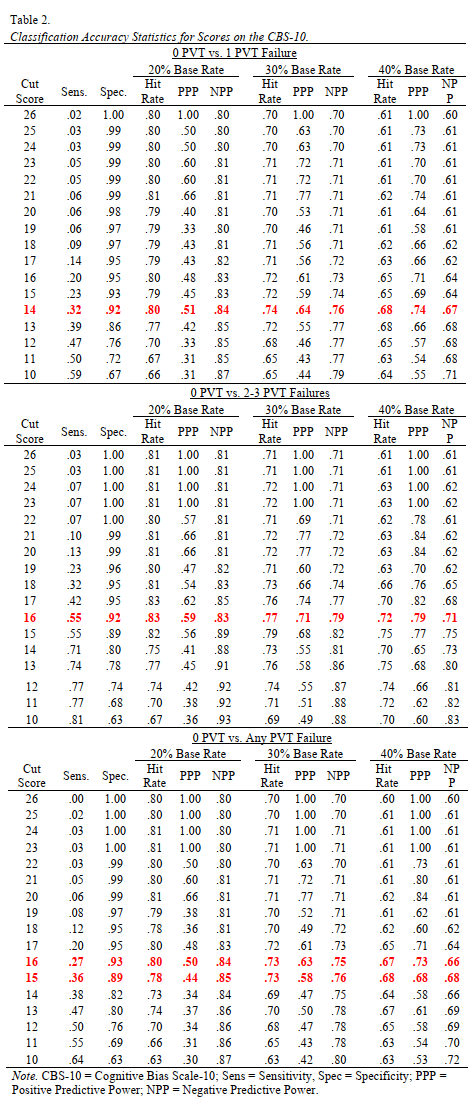

Gaasedelen et al. (2019) developed the Cognitive Bias Scale (CBS) as a comparable measure to the MMPI-2-RF’s highly effective Response Bias Scale (RBS). The RBS was built with criterion coding, using PVT failure to identify potentially useful items; as a result, some consider it the most reliable MMPI-2-RF validity scale given its blended SVT/PVT approach (Ingram & Ternes, 2016). The CBS used the same methods to build a scale assessing feigned cognitive symptoms on the PAI, a symptom domain that was previously overlooked. In our most recent study, we replicated their validation in an active-duty military sample to see whether their suggested cut scores and observations of effectiveness generalize.

What did we find?

- Large effect sizes differentiated CBS scores between those passing PVTs and those failing PVTs

- The CBS showed high specificity and low sensitivity, similar to the initial CBS validation and comparable to what has been observed for the RBS on the MMPI-2-RF

- In general, a cut score of 16 is recommended to maximize specificity while also keeping moderate sensitivity
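For readers less familiar with these statistics, here is a minimal sketch of how sensitivity, specificity, and a between-group effect size can be computed at a given cut score. Everything in it is an assumption made for illustration: the scores are made up (they are not data from our study), the function names are hypothetical, and we assume that scores at or above the cut are flagged as invalid, in whatever score metric the cut is expressed in.

```python
from math import sqrt
from statistics import mean, stdev


def classification_accuracy(scores_failed_pvt, scores_passed_pvt, cut):
    """Return (sensitivity, specificity) for a cut score.

    Assumption: a score at or above `cut` is flagged as invalid responding.
    Sensitivity = share of PVT failures that are flagged.
    Specificity = share of PVT passes that are NOT flagged.
    """
    flagged_failures = sum(score >= cut for score in scores_failed_pvt)
    unflagged_passes = sum(score < cut for score in scores_passed_pvt)
    sensitivity = flagged_failures / len(scores_failed_pvt)
    specificity = unflagged_passes / len(scores_passed_pvt)
    return sensitivity, specificity


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled standard deviation of two groups."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Made-up scale scores for illustration only (NOT data from the study).
failed_pvt = [9, 12, 14, 16, 17, 18, 20, 11, 13, 15]
passed_pvt = [4, 6, 7, 8, 9, 10, 11, 12, 13, 17]

sensitivity, specificity = classification_accuracy(failed_pvt, passed_pvt, cut=16)
effect_size = cohens_d(failed_pvt, passed_pvt)

print(f"sensitivity = {sensitivity:.2f}, specificity = {specificity:.2f}")
print(f"Cohen's d = {effect_size:.2f}")
```

Raising the cut flags fewer people overall, which usually pushes specificity up and sensitivity down. That trade-off is why a validity-scale cut score is typically chosen to keep specificity high first and then retain as much sensitivity as possible.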

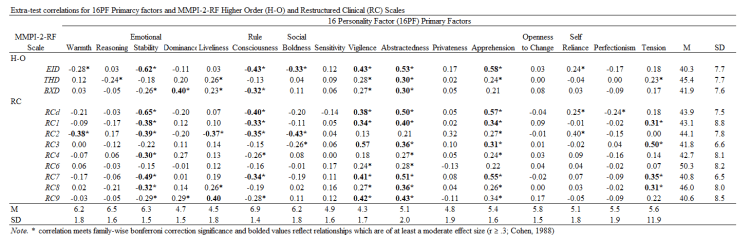

Check out the table below for classification accuracy information (values in bold red are the recommended ones for each comparison [specificity > .9, sensitivity ~ .3]):